Hypotheses

Not only in Equivalence

Helmut Schütz

September 19, 2023



| Abbreviation | Meaning |
|---|---|
| \(\small{\alpha}\) | Nominal level of a test, probability of Type I Error, patient’s risk |
| \(\small{\beta}\) | Probability of Type II Error, producer’s risk |
| BE | Bioequivalence |
| CI, CL | Confidence Interval, Confidence Limit |
| \(\small{\delta}\) | Margin of clinical relevance in Non-Inferiority and Non-Superiority |
| \(\small{\Delta}\) | Clinically relevant difference in BE |
| \(\small{H_0}\) | Null hypothesis |
| \(\small{H_1}\) | Alternative hypothesis (also \(\small{H_\textrm{a}}\)) |
| \(\small{\mu_\text{T},\,\mu_\text{R}}\) | True mean of the Test and Reference treatment, respectively |
| \(\small{\pi}\) | Prospective power (\(\small{1-\beta}\)) |
| TOST | Two One-Sided Tests |

Introduction

What are (statistical) hypotheses?

Before we dive into the matter, we must distinguish between belief systems1 2 3 and science.

Following the concepts of Karl R. Popper, for a given problem we can only state a working hypothesis, which – based on assumptions – has to be formalized in such a way that it can be falsified with accessible methodology. The purpose of falsifiability is to make a theory – consisting of a set of hypotheses – predictive and testable, thus useful in practice.4

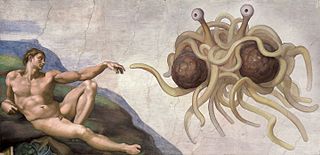

Thus, Russell’s teapot, the Invisible Pink Unicorn, and the Flying Spaghetti Monster do not qualify as working hypotheses: none of them can be falsified.

As long as a working hypothesis has not been falsified, it remains tentatively accepted. Once it is falsified, another problem arises and – hopefully – we are able to develop a new working hypothesis, which is consistent with previous observations. The progress of scientific knowledge can be seen as an evolutionary process.5

“The game of science is, in principle, without end. He who decides one day that scientific statements do not call for any further test, and that they can be regarded as finally verified, retires from the game.”

“It is a good morning exercise for a research scientist to discard a pet hypothesis every day before breakfast. It keeps him young.”

“Whenever a theory appears to you as the only possible one, take this as a sign that you have neither understood the theory nor the problem which it was intended to solve.”

“Truth in science can be defined as the working hypothesis best suited to open the way to the next better one.”

Let us start with an example from the justice system, which – going back to Roman Law10 – presumes that the defendant is innocent until proven guilty.11 After weighing the arguments of the prosecution and the defense, the jury has to arrive at a verdict.

| Verdict | Defendant innocent | Defendant guilty |
|---|---|---|
| Presumption of innocence rejected (considered guilty) | Misjudgment | Correct |
| Presumption of innocence accepted (considered not guilty) | Correct | Misjudgment |

We could formalize the process of reaching a verdict in the justice system in such a way that we specify two hypotheses, the so-called null hypothesis \(\small{H_0}\) (the defendant is innocent) and the alternative hypothesis \(\small{H_1}\) (the defendant is guilty). All formal decisions are subject to two ‘Types’ of Error, namely

- α, which is the probability of a Type I Error (a.k.a. Risk Type I, error of the first kind) and

- β, which is the probability of a Type II Error (a.k.a. Risk Type II, error of the second kind).

This would translate into the following decisions and their associated errors:

| Decision | \(\small{H_0}\) true | \(\small{H_0}\) false |
|---|---|---|
| \(\small{H_0}\) rejected | \(\small{\alpha}\) | \(\small{H_1}\) accepted |
| failed to reject \(\small{H_0}\) | \(\small{H_0}\) accepted | \(\small{\beta}\) |

Of course, we desire to keep both errors as small as possible. Already the Romans were aware of potential misjudgments and considered it more important to avoid sentencing the innocent than to avoid setting culprits free. Hence, the justice systems of democracies opt for \(\small{\alpha<\beta}\), whereas those of authoritarian regimes opt for \(\small{\alpha\gg\beta}\).

Types

Equivalence

In the inferential statistics of an equivalence trial, the null hypothesis \(\small{H_0}\) is inequivalence, which we aim to reject (i.e., accept the alternative hypothesis \(\small{H_1}\) of equivalence instead).
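Written out for data following a lognormal distribution, and with \(\small{\theta_1}\) and \(\small{\theta_2}\) denoting the lower and upper limit of the acceptance range, the null hypothesis of inequivalence consists of two one-sided null hypotheses, each tested at level \(\small{\alpha}\) (hence Two One-Sided Tests, TOST):

\[H_{01}:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}\leq\log_{e}\theta_1\;vs\;H_{11}:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}>\log_{e}\theta_1\]
\[H_{02}:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}\geq\log_{e}\theta_2\;vs\;H_{12}:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}<\log_{e}\theta_2\]

Equivalence is concluded only if both \(\small{H_{01}}\) and \(\small{H_{02}}\) are rejected, i.e., if \(\small{\log_{e}\theta_1<\log_{e}(\mu_\text{T}/\mu_\text{R})<\log_{e}\theta_2}\) is accepted.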

In bioequivalence (BE) specifically:

| Decision | \(\small{H_0}\) true | \(\small{H_0}\) false |
|---|---|---|
| \(\small{H_0}\) rejected | \(\small{\alpha}\) (Patient’s risk) | correct (BE demonstrated) |
| failed to reject \(\small{H_0}\) | correct (not BE) | \(\small{\beta}\) (Producer’s risk) |

\(\small{\alpha}\) is fixed by the agency (commonly \(\small{\leq0.05}\)) and \(\small{\beta}\) is set in the sample size estimation of the study. Decreasing \(\small{\alpha}\), \(\small{\beta}\) (or both of them) increases the sample size.12
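A rough sketch of how \(\small{\alpha}\) and \(\small{\beta}\) drive the sample size. It assumes a balanced 2×2×2 crossover, lognormally distributed data (within-subject \(\small{\sigma_\text{w}=\sqrt{\log_{e}(CV^2+1)}}\)), a T/R-ratio of 0.95, a CV of 25%, and the limits 80.00 – 125.00%. Instead of an exact method (e.g., based on Owen’s Q, as implemented in the R package PowerTOST) it uses a large-sample normal approximation with known variance, so for small studies it may underestimate the required sample size somewhat. The function names are illustrative only.

```python
from math import log, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_tost(n, cv, theta0=0.95, theta1=0.80, theta2=1.25, alpha=0.05):
    """Approximate power of TOST in a balanced 2x2x2 crossover with n subjects.

    Large-sample sketch: the variance is treated as known and the standard
    normal replaces Student's t, so power is somewhat optimistic for small n.
    """
    sigma_w = sqrt(log(cv**2 + 1))  # within-subject SD on the log scale
    se = sigma_w * sqrt(2 / n)      # SE of the estimated log(T/R)
    z = Z.inv_cdf(1 - alpha)        # critical value of each one-sided test
    lower = (log(theta0) - log(theta1)) / se - z
    upper = (log(theta2) - log(theta0)) / se - z
    return max(0.0, Z.cdf(lower) + Z.cdf(upper) - 1)


def sample_size(cv, target=0.80, alpha=0.05, theta0=0.95,
                theta1=0.80, theta2=1.25):
    """Smallest even total sample size with approximate power >= target."""
    if not theta1 < theta0 < theta2:
        raise ValueError("theta0 must lie within the acceptance range")
    n = 4
    while power_tost(n, cv, theta0, theta1, theta2, alpha) < target:
        n += 2  # keep the two sequences balanced
    return n


# Lowering alpha (patient's risk) or beta (i.e., raising the target power)
# increases the sample size; CV = 25% is an arbitrary example.
for alpha, target in [(0.05, 0.80), (0.05, 0.90), (0.025, 0.80)]:
    n = sample_size(0.25, target=target, alpha=alpha)
    print(f"alpha = {alpha}, target power = {target}: n = {n}")
```

The second and third lines of output show a larger n than the first, which is all this sketch is meant to demonstrate.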

We can make four decisions.

Two of them can be right:

- The treatments are in fact equivalent and the study passes: The Confidence Interval (CI) lies entirely within the pre-specified limits.

- The treatments are in fact not equivalent and the study fails: At least one of the Confidence Limits lies outside the pre-specified limits.

Two of them can be wrong:

- If we declare equivalence – although the treatments are not equivalent – patients will be treated with a deficient product: Either the effect will be insufficient or the rate of adverse events will not be acceptable. The chance (i.e., the patient’s risk) that this will happen is \(\small{\alpha}\).

- If we fail to show equivalence – although the treatments are equivalent – the product will not be approved and money is lost. The chance (i.e., the producer’s risk) that this will happen is \(\small{\beta=1-\pi}\), where \(\small{\pi}\) is the power of the study.13

Hence, it is in the interest of the sponsor to plan for high power (\(\small{\pi=1-\beta}\), where \(\small{\beta}\) is the producer’s risk). If power is too low (e.g., < 0.70) or too high (e.g., > 0.95), there might be ethical concerns (i.e., the study protocol may not be accepted by the Independent Ethics Committee / Institutional Review Board, IEC / IRB): too few subjects make an uninformative, failed study likely, whereas too many expose more subjects than necessary. There are no hard limits for power but 0.80 – 0.90 is common.14 15

More generally:

“The number of subjects in a clinical trial should always be large enough to provide a reliable answer to the questions addressed.”

Two points are important.

- From a regulatory perspective the outcome of a comparative bioavailability study is dichotomous: Either BE was demonstrated (the CI lies entirely within the pre-specified acceptance range) or not.

  - The patient’s risk has to be controlled (\(\small{\alpha\leq0.05}\)).

  - Post hoc power does not play any role in this decision, i.e., the agency should not even ask for it (since the producer’s risk \(\small{\beta}\) has no bearing on the patient’s risk \(\small{\alpha}\)).17

- The study was planned for a certain prospective power based on assumptions. If they were not exactly realized in the study, which is very likely:

  - If the test deviated more than assumed from the reference treatment and/or the variability was higher than assumed and/or the dropout rate was higher than anticipated, the sponsor’s risk of failing increased (the study was ‘underpowered’).

  - If the test deviated less than assumed from the reference treatment and/or the variability was lower than assumed and/or the dropout rate was lower than anticipated, the sponsor’s risk of failing decreased (the study was ‘overpowered’).

  - Only if the study failed but was inconclusive (at least one confidence limit outside the acceptance range whilst the point estimate was within it) may it be of interest for the sponsor to assess post hoc power, in order to decide whether it makes sense to repeat the study in a larger sample size without reformulation. Although this is generally accepted by agencies, there might be problems with an inflated Type I Error.19

An ‘optimal’ study design is one which – taking all assumptions into account – has a reasonably high chance (power) of demonstrating equivalence whilst controlling the patient’s risk.

“Ask ten physicians what bioequivalence is and eleven get it wrong.”

Non-Inferiority, -Superiority

Contrary to BE, where a study is assessed with \(\small{\alpha=0.05}\) in TOST (or by a \(\small{100\,(1-2\times0.05)\%}\) Confidence Interval), in Non-Inferiority and Non-Superiority a single one-sided test with \(\small{\alpha=0.025}\) is employed.
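Why TOST and the confidence interval lead to the same decision can be seen from the rejection regions. With \(\small{\hat{d}}\) the estimated difference of the log-transformed means, \(\small{\widehat{SE}}\) its standard error, and \(\small{t_{1-\alpha,\nu}}\) the \(\small{1-\alpha}\) quantile of Student’s t-distribution with \(\small{\nu}\) degrees of freedom, both one-sided null hypotheses of TOST are rejected if and only if

\[\hat{d}-t_{1-\alpha,\nu}\,\widehat{SE}>\log_{e}\theta_1\;\;\text{and}\;\;\hat{d}+t_{1-\alpha,\nu}\,\widehat{SE}<\log_{e}\theta_2\]

The two expressions on the left are the lower and upper limits of the two-sided \(\small{100\,(1-2\,\alpha)\%}\) confidence interval; hence, with \(\small{\alpha=0.05}\), TOST passes exactly when the 90% CI lies entirely within \(\small{\left\{\theta_1,\theta_2\right\}}\).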

Based on a ‘clinically relevant margin’ \(\small{\delta}\), we have different hypotheses.

Non-Inferiority

We assume that higher responses are better. If data follow a lognormal distribution, the hypotheses are \[H_0:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}\leq \log_{e}\delta\;vs\;H_1:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}>\log_{e}\delta\tag{1a}\] If data follow a normal distribution, the hypotheses are \[H_0:\mu_\text{T}-\mu_\text{R}\leq \delta\;vs\;H_1:\mu_\text{T}-\mu_\text{R}>\delta\tag{1b}\]
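In practice, the one-sided test at \(\small{\alpha=0.025}\) amounts to a check of one confidence limit. With \(\small{\hat{d}}\) the estimated \(\small{\log_{e}(\mu_\text{T}/\mu_\text{R})}\), \(\small{\widehat{SE}}\) its standard error, and \(\small{t_{1-\alpha,\nu}}\) the critical value of Student’s t-distribution with \(\small{\nu}\) degrees of freedom, \(\small{H_0}\) of (1a) is rejected if

\[\hat{d}-t_{1-\alpha,\nu}\,\widehat{SE}>\log_{e}\delta\]

i.e., if the lower limit of the two-sided 95% CI lies above \(\small{\log_{e}\delta}\). For (1b), \(\small{\hat{d}}\) is the estimated difference \(\small{\mu_\text{T}-\mu_\text{R}}\) and the comparison is with \(\small{\delta}\) itself.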

Applications

- Clinical phase III trials comparing a new treatment with placebo or an established treatment (efficacy).

- Comparing minimum concentrations of a new Modified Release (MR) formulation with those of an approved Immediate Release (IR) formulation as a surrogate of efficacy.25

Non-Superiority

We assume that lower responses are better. If data follow a lognormal distribution, the hypotheses are \[H_0:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}\geq \log_{e}\delta\;vs\;H_1:\log_{e}\frac{\mu_\text{T}}{\mu_\text{R}}<\log_{e}\delta\tag{2a}\] If data follow a normal distribution, the hypotheses are \[H_0:\mu_\text{T}-\mu_\text{R}\geq \delta\;vs\;H_1:\mu_\text{T}-\mu_\text{R}<\delta\tag{2b}\]
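Correspondingly, with \(\small{\hat{d}}\) the estimated \(\small{\log_{e}(\mu_\text{T}/\mu_\text{R})}\), \(\small{\widehat{SE}}\) its standard error, and \(\small{t_{1-\alpha,\nu}}\) the critical value of Student’s t-distribution, \(\small{H_0}\) of (2a) is rejected at \(\small{\alpha=0.025}\) if the upper limit of the two-sided 95% CI lies below \(\small{\log_{e}\delta}\):

\[\hat{d}+t_{1-\alpha,\nu}\,\widehat{SE}<\log_{e}\delta\]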

Applications

- Clinical phase III trials comparing adverse events (AEs) of a new treatment with placebo or an established treatment (safety).

- Comparing maximum concentrations of a new MR formulation with those of an approved IR formulation as a surrogate of safety.23

Misconceptions

It is a common fallacy to regard the \(\small{p}\)-value of a statistical significance test as the probability that the null hypothesis \(\small{H_0}\) is true (or the alternative hypothesis \(\small{H_1}\) is false).26 27 In frequentist statistics the outcome of any level \(\small{\alpha}\)-test is dichotomous, i.e., hypotheses are considered true or false, not something that can be represented with a probability.

In simple words: If a test is performed at \(\small{\alpha=0.05}\), a resulting \(\small{p=0.0001}\) is not more informative than \(\small{p=0.0499}\). In both cases the null hypothesis is rejected – nothing more.
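A small simulation illustrates both points. It assumes, purely for simplicity, a two-sided one-sample z-test with known variance; the function names are hypothetical. The decision is identical for \(\small{p=0.0001}\) and \(\small{p=0.0499}\), and although \(\small{H_0}\) is true in every simulated study, the \(\small{p}\)-values are spread uniformly between 0 and 1. Hence, a single \(\small{p}\)-value cannot be the probability that \(\small{H_0}\) is true.

```python
import random
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def decision(p, alpha=0.05):
    """The outcome of a level-alpha test is dichotomous."""
    return "H0 rejected" if p < alpha else "failed to reject H0"


def simulate_p_values(n_sim=20_000, n=20, seed=1):
    """Two-sided z-tests of H0: mu = 0 (known sigma = 1) on samples drawn
    from N(0, 1), i.e., H0 is true in every simulated study."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(n_sim):
        mean = sum(rng.gauss(0.0, 1.0) for _ in range(n)) / n
        z = mean * n ** 0.5  # standardized sample mean
        p_values.append(2 * (1 - Z.cdf(abs(z))))
    return p_values


print(decision(0.0001), "|", decision(0.0499))  # same decision for both

p = simulate_p_values()
# H0 is always true, yet p-values are spread evenly over (0, 1):
print(sum(pv < 0.05 for pv in p) / len(p))  # close to 0.05 (Type I Error)
print(sum(pv > 0.50 for pv in p) / len(p))  # close to 0.50
```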

Examples

- Testing for unequal carry-over (a.k.a. a sequence effect) or for a Group-by-Treatment interaction.

  At a recent BE workshop28 I had a conversation with a prominent member of the FDA. She confirmed that at the agency the G × T interaction is tested at the 0.05 level. But then she also said:

  »We often see not only p-values just below 0.05, but also some with 0.0001 and lower…«

  She fell into the trap.

- Testing for equal variances in parallel groups (e.g., by the F-test or Levene’s test).

Similarly in comparative bioavailability (BA): A narrow confidence interval of one test product does not imply that it is ‘more equivalent’ than another with a wide CI.

History of Bioequivalence

In the early 1980s, originators tried and failed to falsify the concept (i.e., by comparing Bioequivalence in healthy volunteers with large Therapeutic Equivalence (TE) studies in patients): If BE passed, TE passed as well, and vice versa.

Had they succeeded (BE passed while TE failed), generic companies would have had to demonstrate TE in order to get products approved. Such studies would have to be much larger than the originators’ Phase III studies, making them economically infeasible.29 Essentially, that would have meant the end of the generic industry.

However, comparative BA is also used by originators in scaling up the formulations used in Phase III to the to-be-marketed formulation, in supporting post-approval changes, in line extensions of approved products, and for testing drug-drug interactions or food effects. Hence, about 30% of all BE trials are performed by originators. Had they succeeded in refuting the concept, they would have shot themselves in the foot.

Assumptions

We must keep in mind that the acceptance range in bioequivalence is based on a ‘clinically relevant difference’ \(\small{\Delta}\), i.e., for data following a lognormal distribution \[\left\{\theta_1,\theta_2\right\}=\left\{100\,(1-\Delta),100\,(1-\Delta)^{-1}\right\}\tag{3}\] The commonly assumed \(\small{\Delta=20\%}\)30 leading to \(\small\left\{80.00\%,125.00\%\right\}\) is arbitrary (as is any other).
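Equation (3) as arithmetic: a minimal sketch (the function name is illustrative) that gives 80.00 – 125.00% for \(\small{\Delta=20\%}\) and 90.00 – 111.11% for \(\small{\Delta=10\%}\), and shows that the limits are symmetric around zero on the log scale.

```python
from math import log


def acceptance_limits(delta):
    """Limits in percent following Eq. (3): 100(1 - delta) and 100/(1 - delta)."""
    if not 0 < delta < 1:
        raise ValueError("delta must lie between 0 and 1")
    return 100 * (1 - delta), 100 / (1 - delta)


for delta in (0.20, 0.10):
    lower, upper = acceptance_limits(delta)
    # On the log scale the limits are symmetric around zero (T/R = 100%).
    print(f"Delta = {delta:.0%}: {lower:.2f}% to {upper:.2f}% "
          f"(log scale {log(lower / 100):+.4f} to {log(upper / 100):+.4f})")
```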

In general, detecting a 20% difference is not an issue for exogenous drugs.

However, endogenous compounds can be problematic. Before BE is attempted, it has to be shown that the administered dose is sensitive enough to detect such a difference. Sometimes not even the highest tolerated supra-therapeutic dose results in levels substantially above the baseline. An example is gastric-resistant sodium hydrogencarbonate: due to homeostasis and the large buffer capacity of blood, it is practically impossible to demonstrate BE.

Bioequivalence was never a scientific concept in the Popperian sense but an ad hoc solution to a pressing problem in the 1970s.31 32 Nevertheless, apart from occasional anecdotal reports33 (mainly dealing with narrow therapeutic index drugs), no problems have become evident when switching between the originator and generics (and vice versa) in terms of lack of efficacy or compromised safety, thus providing decades of empirical evidence that the approach is sufficient in practice.

Further assumptions are involved in designing the study, namely

- the deviation of the test from the reference treatment and

- the variance (commonly expressed as a coefficient of variation).

Both are uncertain and unknown in advance.

Furthermore, we assume that similar concentrations in the circulation result in a similar effect and hence, that we can extrapolate from a study in healthy subjects to the patient population.

“What is the justification for studying bioequivalence in healthy volunteers?

“Variability is the enemy of therapeutics” and is also the enemy of bioequivalence. We are trying to determine if two dosage forms of the same drug behave similarly. Therefore we want to keep any other variability not due to the dosage forms at a minimum. We choose the least variable “test tube”, that is, a healthy volunteer.

Disease states can definitely change bioavailability, but we are testing for bioequivalence, not bioavailability.”

“[…] the studies should normally be performed in healthy volunteers unless the drug carries safety concerns that make this unethical. This model, in vivo healthy volunteers, is regarded as adequate in most instances to detect formulation differences and to allow extrapolation of the results to populations for which the reference medicinal product is approved (the elderly, children, patients with renal or liver impairment, etc.).”

“In order to reduce variability not related to differences between products, the studies should normally be performed in healthy subjects unless the drug carries safety concerns that make this approach unethical. Conducting BE studies in healthy subjects is regarded as adequate in most instances to detect formulation differences and to allow extrapolation of the results to populations for which the product is intended.”

It might not be obvious from the quotes, but Bioequivalence (generally based on pharmacokinetics, PK, sometimes on pharmacodynamics, PD) is considered a surrogate for Therapeutic Equivalence.

“[…] all models are approximations.

Essentially, all models are wrong, but some are useful.”

Is bioequivalence science in the strict sense? No. Is it useful? Yes, indeed.

“Bottom Line

No prospective study has ever found that an FDA approved generic product does not show the same clinical efficacy and safety as the innovator product, even when special populations (e.g., elderly, women, severely sick patients) are studied.”

A Black Swan?

Did we discover the first Black Swan in 2017?

Following concerns raised by the French competent authority ANSM in 2012 about stability issues, the originator (Merck KGaA) replaced one of the excipients of its levothyroxine sodium formulation Lévothyrox, namely lactose, by mannitol and citric acid.

BE was demonstrated in a 2×2×2 crossover study in healthy subjects38 (204 completers) with pre-specified limits of 90.00 – 111.11% for AUC and Cmax (i.e., \(\small{\Delta=10\%}\) according to the EMA’s requirement for narrow therapeutic index drugs, NTIDs). Both the new and the old formulation were administered as a single – supra-therapeutic – 600 µg dose. PK metrics were adjusted for the baseline.

The point estimate (PE) of AUC0–72 was 99.3% (90% CI: 95.6 – 103.2%) and that of Cmax 101.7% (98.8 – 104.6%), both easily fulfilling the requirements.
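The decision rule itself is simple: BE is concluded only if the entire 90% CI lies within the acceptance range. A minimal sketch applied to the confidence intervals reported above (the function name is hypothetical, and treating the limits as inclusive is an assumption of this sketch):

```python
def be_passed(lower_cl, upper_cl, theta1, theta2):
    """BE is concluded only if the entire CI lies within [theta1, theta2]."""
    return theta1 <= lower_cl and upper_cl <= theta2


limits = (90.00, 111.11)  # acceptance range in percent (Delta = 10%)
ci_90 = {"AUC0-72": (95.6, 103.2), "Cmax": (98.8, 104.6)}  # as reported

for metric, (lower, upper) in ci_90.items():
    verdict = "passed" if be_passed(lower, upper, *limits) else "failed"
    print(f"{metric}: {lower} - {upper}% -> {verdict}")
```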

Neither physicians nor patients were aware of what was going on – this wasn’t a product switch but a change in the formulation. Both thought they were continuing to use the same product…

In the first three months after the new formulation’s release to the French market, Adverse Events (AEs) surged (fatigue / asthenia, headache, insomnia, vertigo, depression, joint and muscle pain, alopecia). Within 24 days the ANSM received 9,000 (‼) AE reports. The French Minister of Health addressed patients’ concerns on September 15th by asking Merck to reintroduce the previous formulation within 15 days, on a temporary basis and under medical prescription – only for patients with persistent and undesirable side effects.39 40 An analysis of 5,062 patient complaints by the ANSM concluded that while »thyroid imbalances can occur for some patients during the transition« between the old and new formulations, the reported symptoms »are in line with those experienced with the previous formulation. No new type of adverse event related to the new formulation was found«.41

Possibly the IEC / IRB should have rejected the study protocol. For a conservative T/R-ratio of 97.5% and a CV of 25%, targeted at 90% power, only 164 subjects would have been sufficient instead of the 216 in the study (target power 96%). However, with the observed PEs and CVs, the study would have passed with such a smaller sample size as well.
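These figures can be roughly cross-checked. The sketch below assumes a balanced 2×2×2 crossover and uses a large-sample normal approximation (known variance, standard normal instead of Student’s t) rather than an exact method; for samples this large the difference is small. With the planning assumptions stated above it gives a power close to 0.90 for 164 and close to 0.96 for 216 subjects. The function name is illustrative.

```python
from math import log, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_tost(n, cv, theta0, theta1, theta2, alpha=0.05):
    """Normal approximation of TOST power, balanced 2x2x2 crossover, n subjects."""
    se = sqrt(log(cv**2 + 1)) * sqrt(2 / n)  # SE of the estimated log(T/R)
    z = Z.inv_cdf(1 - alpha)                 # one-sided critical value
    lower = (log(theta0) - log(theta1)) / se - z
    upper = (log(theta2) - log(theta0)) / se - z
    return max(0.0, Z.cdf(lower) + Z.cdf(upper) - 1)


# Planning assumptions from the text: T/R 97.5%, CV 25%, limits 90.00-111.11%
for n in (164, 216):
    pw = power_tost(n, cv=0.25, theta0=0.975, theta1=0.90, theta2=1 / 0.90)
    print(f"n = {n}: approximate power {pw:.3f}")
```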

Did we really discover a Black Swan, i.e., was the assumed \(\small{\Delta=10\%}\) not strict enough for this NTID?

Not necessarily. Likely, the Type I Error \(\small{\alpha}\) just backfired – as it potentially can in any other study. In the current product-specific guidance, levothyroxine is considered a critical dose drug and the acceptance limits are 90.00 – 111.11% for AUC0–72 and 80.00 – 125.00% for Cmax.42


Licenses

Helmut Schütz 2023

pandoc GPL 2.0.

1st version March 29, 2021. Rendered September 19, 2023 21:26 CEST by rmarkdown via pandoc in 0.04 seconds.

Footnotes and References

Quoting my late father: »If you believe, go to church.«↩︎

Anecdotal reports like

»My uncle Tom told me that snake oil cured the anacousia of his friend John’s grandniece’s spouse in just a couple of days.«

The plural of anecdote is not data. – Roger Brinner↩︎

Homeopathy, astrology, palm reading, tasseomancy, the MMR vaccine causing autism and 5 GHz Wi-Fi causing the COVID-19 pandemic, Bill Gates injecting microchips, you name it.↩︎

Unlike in belief systems, which follow dogma, ‘truth’ does not belong to the realm of science. Therefore, nothing can be ‘proved’ in science.

Nobody understands what gravity really is. Still, Newton’s \(\small{F=G\frac{m_1\,\cdot\,m_2}{r^2}}\) is useful over wide scales. Only if large masses are involved do we need Einstein’s \(\small{G_{\mu\nu}=\frac{8\,\pi\,\cdot\,G}{c^4} T_{\mu\nu}}\).↩︎

Fundamentally opposite to pseudoscience, which is dogmatic, a scientist will always be happy if a hypothesis is falsified or an error in its assumptions is discovered.↩︎

Popper KR. The Logic of Scientific Discovery. Ch. 2, Section XI: Methodological Rules as Conventions. London: Hutchinson; 1959.↩︎

Lorenz K. On Aggression. London: Methuen Publishing; 1966.↩︎

Popper KR. Objective Knowledge. An Evolutionary Approach. Oxford: Oxford University Press; Revised Ed. 1972.↩︎

Quoted in: Reif F, Larkin JH. Cognition in Scientific and Everyday Domains: Comparison and Learning Implications. J Res Sci Teach. 1991; 28(9): 733–60. doi:10.1002/tea.3660280904.↩︎

Code of Justinian. 534. »Ei incumbit probatio qui dicit, non qui negat.« [Proof lies on him who asserts, not on him who denies.]↩︎

UN Department of Public Information. Universal Declaration of Human Rights. Article 11.1. Online.↩︎

In the aviation industry an extremely small \(\small{\alpha}\) is applied in testing critical components. We don’t want to risk aircraft falling from the sky. The same holds for the automotive industry. Would you ever drive a car with a risk of 5% that the brakes fail?↩︎

If we plan all studies for 80% power, treatments are equivalent, and all assumptions are exactly realized, on average one out of five studies will fail by pure chance.

Science is a cruel mistress.↩︎

FDA, CDER. Guidance for Industry. Statistical Approaches to Establishing Bioequivalence. APPENDIX C. Rockville. January 2001. Download.↩︎

FDA, CDER. Draft Guidance for Industry. Statistical Approaches to Establishing Bioequivalence. Silver Spring. December 2022. Download.↩︎

ICH. Statistical Principles for Clinical Trials. E9. 1998. Online.↩︎

According to the WHO:

»The a posteriori power of the study does not need to be calculated. The power of interest is that calculated before the study is conducted to ensure that the adequate sample size has been selected. […] The relevant power is the power to show equivalence within the pre-defined acceptance range.«↩︎

Hoenig JM, Heisey DM. The Abuse of Power: The Pervasive Fallacy of Power Calculations for Data Analysis. Am Stat. 2001; 55(1): 19–24. doi:10.1198/000313001300339897. Open Access.↩︎

Fuglsang A. Pilot and Repeat Trials as Development Tools Associated with Demonstration of Bioequivalence. AAPS J. 2015; 17(3): 678–83. doi:10.1208/s12248-015-9744-6. Free Full Text.↩︎

Panel Discussion at: The Global Bioequivalence Harmonization Initiative: EUFEPS/AAPS 2nd International Workshop. Rockville. 15–16 September 2016.↩︎

I confess to using it myself sometimes.↩︎

I know one regulator of Poland’s agency who rejects study protocols with ‘bioequivalence’ in the title. He usually writes: »If you know already that products are bioequivalent, why do you want to perform a study?«↩︎

Health Canada. Guidance Document. Conduct and Analysis of Comparative Bioavailability Studies. Ottawa. Adopted 2012/02/08, Revised 2018/06/08. Online.↩︎

Health Canada. Guidance Document. Comparative Bioavailability Standards: Formulations Used for Systemic Effects. Ottawa. Adopted 2012/02/08, Revised 2018/06/08. Online.↩︎

EMA, CHMP. Guideline on the pharmacokinetic and clinical evaluation of modified release dosage forms. London. 20 November 2014. Online.↩︎

Vidgen B, Yasseri T. P-Values: Misunderstood and Misused. Frontiers in Physics. 4(6): Article 6. doi:10.3389/fphy.2016.00006. Open Access.↩︎

Wasserstein RL, Lazar NA. The ASA’s Statement on p-Values: Context, Process, and Purpose. Am Stat. 2016; 70(2): 129–33. doi:10.1080/00031305.2016.1154108. Open Access.↩︎

Medicines for Europe. 2nd Bioequivalence Workshop. Session 2 – ICH M13 – Bioequivalence for IR solid oral dosage forms. Brussels. 26 April 2023.↩︎

In Phase III we try to demonstrate that verum performs ‘better’ than placebo, i.e., one-sided tests for non-inferiority (effect) and non-superiority (adverse reactions). Such studies are already large: Approving statins and COVID-19 vaccines required tens of thousands of volunteers. Can you imagine how many it would need to detect a 20% difference between two treatments?↩︎

Where does it come from? Two stories:

Les Benet recounted that there was a poll at the FDA and – essentially based on gut feeling – the 20% saw the light of day.

I’ve heard another one, which I like more. Wilfred J. Westlake, one of the pioneers of BE, was a statistician at SKF. During a coffee and cig break (everybody was smoking in the 1970s) he asked his colleagues in the clinical pharmacology department »Which difference in blood concentrations do you consider relevant?« Yep, the 20% were born.↩︎

Levy G, Gibaldi M. Bioavailability of Drugs. Circulation. 1974; 49: 391–4. doi:10.1161/01.CIR.49.3.391.↩︎

Skelly JP. A History of Biopharmaceutics in the Food and Drug Administration 1968–1993. AAPS J. 2010; 12(1): 44–50. doi:10.1208/s12248-009-9154-8. Free Full Text.↩︎

The few case reports telling the opposite might as well be explained by non-compliance or the nocebo effect.↩︎

Benet LZ. Why Do Bioequivalence Studies in Healthy Volunteers? 1st MENA Regulatory Conference on Bioequivalence, Biowaivers, Bioanalysis and Dissolution. Amman. 23 September 2013. Online.↩︎

EMA, CHMP. Guideline on the Investigation of Bioequivalence. London. 20 January 2010. Online.↩︎

ICH. Bioequivalence for Immediate Release Solid Oral Dosage Forms. M13A. Draft version. 20 December 2022. Online.↩︎

Box GEP, Draper NR. Empirical Model-Building and Response Surfaces. New York: Wiley; 1987. p. 424.↩︎

Gottwald-Hostalek U, Uhl W, Wolna P, Kahaly GJ. New levothyroxine formulation meeting 95–105% specification over the whole shelf-life: results from two pharmacokinetic trials. Curr Med Res Opin. 2017; 33(2): 169–74. doi:10.1080/03007995.2016.1246434.↩︎

Macdonald G. French police visit Merck KGaA Lyon plant as part of levothyrox probe. Outsourcing-Pharma. 04 Oct 2017, updated 20 Dec 2017. Online.↩︎

Melville NA. Side Effects Skyrocket in France With Levothyroxine Reformulation. Medscape. October 11, 2017. Online.↩︎

ANSM. Point d’actualité sur le Levothyrox et les autres médicaments à base de lévothyroxine: Les nouveaux résultats de l’enquête nationale de pharmacovigilance confirment les premiers résultats publiés le 10 octobre 2017 – Communiqué. Posted 30/01/2018, updated 19/03/2021. Online. [French]↩︎

EMA, CHMP. Levothyroxine tablets 12.5 mcg, 25 mcg, 50 mcg, 75 mcg, 100 mcg and 200 mcg (and additional strengths within the range) product-specific bioequivalence guidance. Amsterdam. 10 December 2020. Online.↩︎